Data Pipeline tips and advice for getting started working with data

You are here because you have Data Pipeline, or you have the publicly available Data Pipeline data, and you are ready to get your hands dirty with some clean data!

Watch the Data Pipeline getting started video

Downloading Data Pipeline data using AWS CLI

Getting AWS CLI set up locally is very simple. Start here with these instructions from the AWS Documentation.

Setting up credentials (only necessary for accessing a private Data Pipeline S3 bucket, not the demo data public S3 bucket)

aws configure --profile parsely_dpl

AWS Access Key ID [None]: ENTER ACCESS ID

AWS Secret Access Key [None]: ENTER SECRET KEY

Default region name [None]: us-east-1

Default output format [None]: jsonDownload one file

Once AWS CLI is installed, it can be used to download a file from your Parse.ly Data Pipeline S3 bucket, or from the public Parse.ly S3 bucket with demo Data Pipeline data.

For the demo Parse.ly Data Pipeline data

aws --no-sign-request s3 cp s3://parsely-dw-parse-ly-demo events/file_name.gzFor your private customer-specific S3 bucket Make sure to use the profile flag

aws s3 cp s3://parsely-dw-bucket-name-here events/file_name.gz --profile parsely_dplDownload all the files

If you want to download all of the files in an S3 bucket, follow the below instructions. Caution, this might be a large amount of data!

For the demo Parse.ly Data Pipeline data

aws --no-sign-request s3 cp s3://parsely-dw-parse-ly-demo . --recursiveFor your private customer-specific S3 bucket Make sure to use the profile flag

aws s3 cp s3://parsely-dw-bucket-name-here . --recursive --profile parsely_dplCopy the data to your own S3 bucket

There are a few ways to do this! Using AWS CLI is a simple way to perform this task locally.

For the demo Parse.ly Data Pipeline data

aws s3 --no-sign-request cp s3://parsely-dw-parse-ly-demo s3://your-bucket-here --recursiveFor your private customer-specific S3 bucket Make sure to use the profile flag

aws s3 cp s3://parsely-dw-bucket-name-here s3://your-bucket-here --recursive --profile parsely_dplCopy the Data Pipeline data to Redshift or Google BigQuery

Parse.ly has made a Github repository specific to these use cases!.

The README.md in the repository linked above is going to have the most detailed information for how to install and use it best. Below are a few examples of how to use it for handy tasks once you have the parsely_raw_data repo installed.

Copy the S3 data to your Redshift database

This command will create an Amazon Redshift table using the specified Parse.ly schema and load the Data Pipeline data into the new table.

python -m parsely_raw_data.redshiftCopy the S3 data to your Google BigQuery

This command will create a Google BigQuery table using the specified Parse.ly schema and load the Data Pipeline data into the new table

python -m parsely_raw_data.bigqueryQuery the Data Pipeline data using AWS Athena

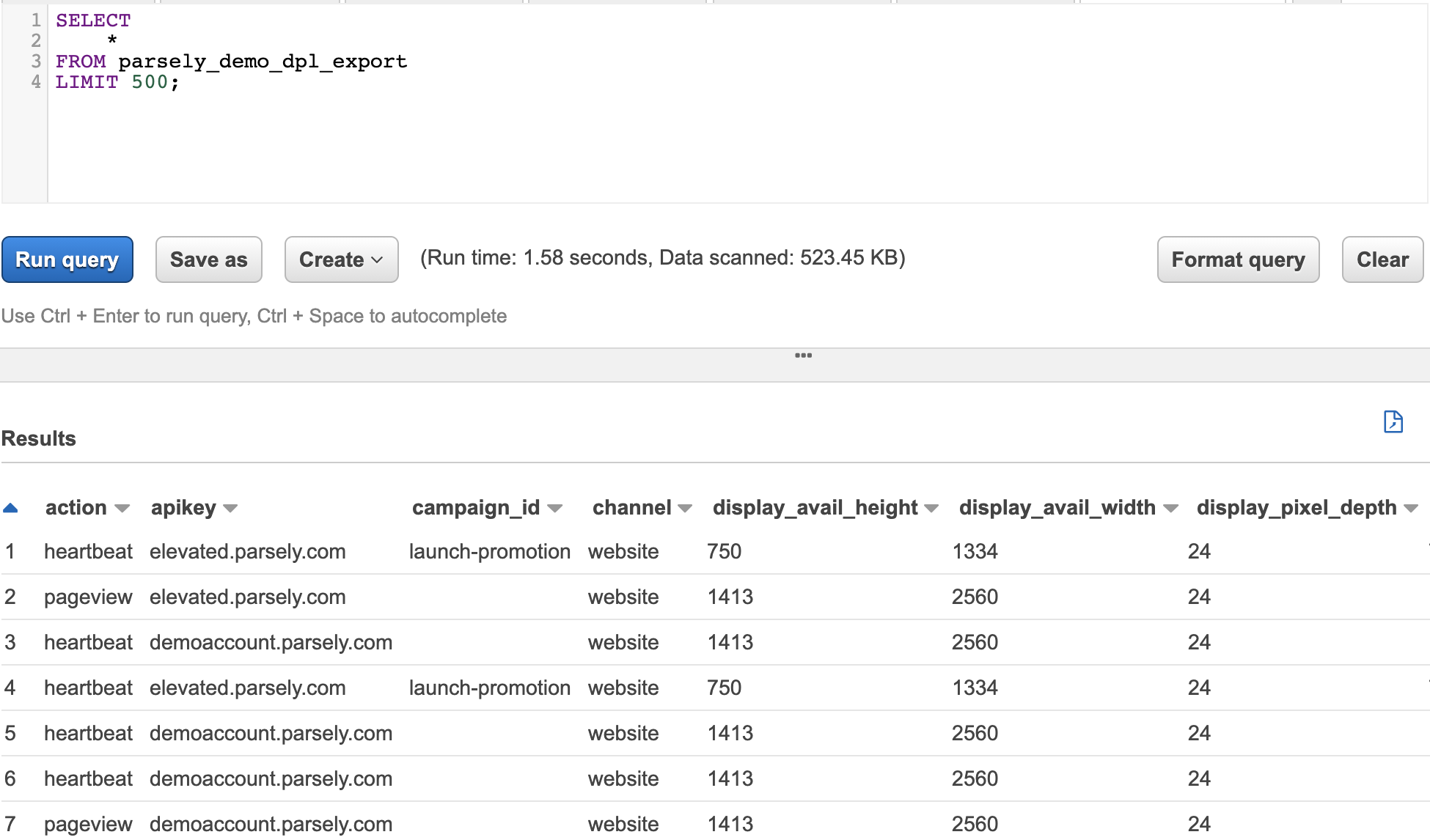

Don’t want to move any data? We got you covered! AWS Athena is a SQL interface that allows you to query S3 files directly.

- Create an Athena table using the Parse.ly Data Pipeline Athena schema

- Load the data into the recommended year-month partitions:

ALTER TABLE table_name_here ADD PARTITION (year='YYYY', month='MM') location 's3://parsely-dw-bucket-name-here/events/YYYY/MM'

- Use Athena to query the Data Pipeline data!

Getting started queries to answer common questions

These queries as formatted as if you are using Athena to query the Data Pipeline data.

Retrieve all records

This query will retrieve all records inside the Athena table that is reading from the S3 files. Please note that this will only retrieve partitions that have loaded (see section above). The more specific you are about a partition you are querying, the cheaper your Athena query will be.

select * from parsely_data_pipeline_table_nameBot traffic investigation

Bot traffic is getting smarter! To investigate the user agent and IP address to a specific post on a certain day, you can use the following query as a template

select

user_agent,

visitor_ip,

count(action) as pageviews

from parsely_data_pipeline_table_name

where

year = 'yyyy' and --this makes the query cheaper!

month = 'mm' and --this makes the query cheaper!

action = 'pageview' and

url like '%only-include-unique-url-path-here%' and

date(ts_action) = 'yyyy-mm-dd'Engaged-time by referrer type

This is a template query to retrieve engaged time by referrer category.

select

channel,

ref_category,

sum(engaged_time_inc) as engaged_time_seconds,

sum(engaged_time_inc)/60 as engaged_time_minutes

from parsely_data_pipeline_table_name

where

year = 'yyyy' and

month = 'mm'

group by 1,2

order by 3 descView conversions

Your conversions data is included in your Data Pipeline data! Use the template below to get you started with the conversions data.

select

*

from parsely_data_pipeline_table_name

where

year = 'yyyy' and --this makes the query cheaper!

month = 'mm' and --this makes the query cheaper!

action = 'conversion'Use dbt and a pre-formatted star schema to organize the Data Pipeline data in Redshift

A “data-build tool” for your Parse.ly data that automates SQL table creation for your data pipeline. It creates queryable tables for things like page views, sessions, loyalty users, subscribers, engagement levels, read-time, and handles the incremental loading of new data from S3 to your SQL tables. By handling this setup work for you, the data-build tool reduces configuration time and lets you get started writing your own custom queries more quickly. You can also find more information in our associated Github Repo: https://github.com/Parsely/parsely_raw_data/tree/master/dbt/redshift

How to get started

- Install DBT and requirements located in the main

/dbt/folder one level up.pip install -r requirements.txt - Edit the following files:

~/.dbt/profiles.yml: Input profile, Redshift cluster, and database information. Instructions can be found here in the dbt docs.settings/default.py: This is the one stop shop for all parameters that need to be configured.

- Test it by running

python -m redshift_etl. Ifsettings/default.pyfully updated, then no parameters are needed. However if arguments are given, those will override any settings indefault.py. - Schedule

redshift_etl.pyto run on an automated schedule (recommended daily)

Schemas/models

- Users Table Grain: 1 row per unique user ID defined by IP address and cookie. The table that provides the Parse.ly DPL lifetime engagement of a user including defining the user as Loyalty and Rolling 30 day loyalty.

- Sessions Table Grain: 1 row per user sessions. A session is defined as any activity by one user without being idle for more than 30 minutes. The session table includes total engagement and pageview metrics for the entire session as well as the user-types at the time of the session. This allows for simplified identification of conversions into loyalty users and subscribers.

- Content Table Grain: 1 row per article or video. This table contains only the most recent metadata for each article or video and aids in simplified reporting and aggregation when metadata is changed throughout the lifetime of an article.

- Campaigns Table Grain: 1 row per campaign. This table contains only the most recent description for each campaign.

- Pageviews Table Grain: 1 row per pageview. This table contains the referrer, campaign, timestamps, engaged time, and at-time-of-engagement metadata info for each pageview. The pageviews are organized to view the order and flow of pageviews within a session for one user.

- Videoviews Table Grain: 1 row per videoview. This table contains the referrer, campaign, timestamps, engaged time, and at-time-of-engagement metadata info for each video view. The videviews are organized to view the order and flow of videoviews within a session for one user.

- Custom events Table Grain: 1 row per custom event sent through the Parse.ly DPL. This is any event that is not: pageview, heartbeat, videostart, or vheartbeat. These can be specified in the dbt_project.yml file and contain keys to join to users, sessions, content, and campaigns.

Last updated: June 05, 2025